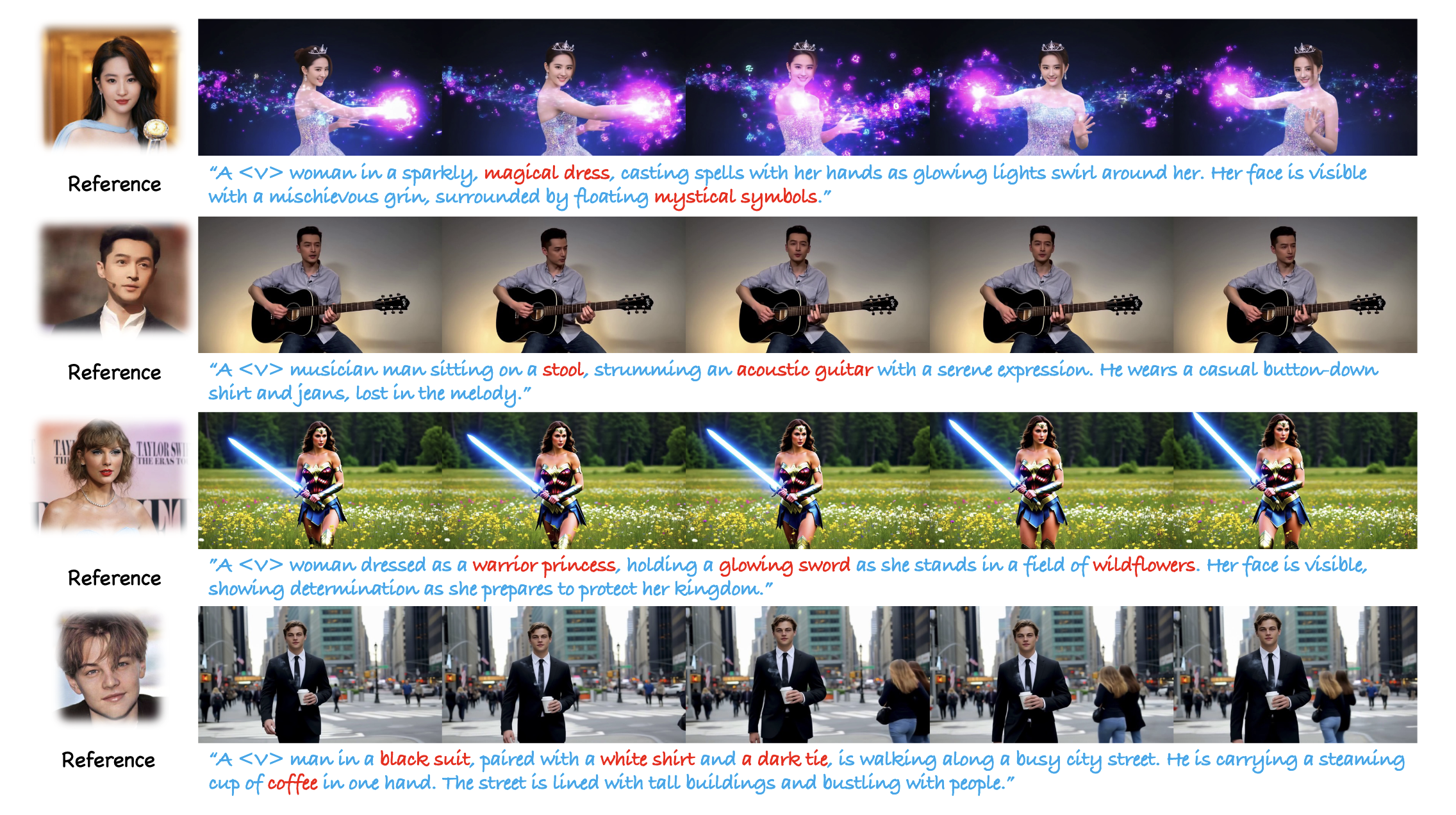

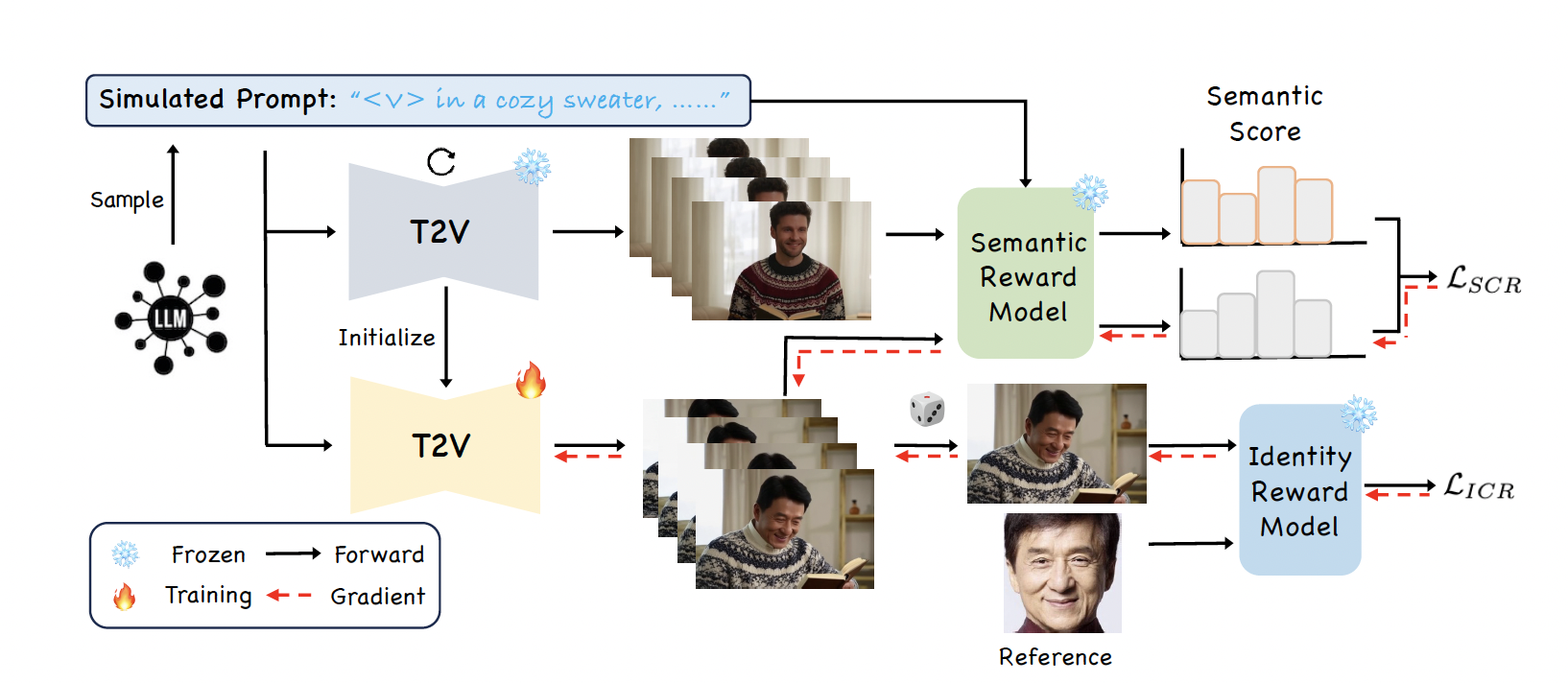

Text-to-video (T2V) generation has made significant progress in synthesizing realistic general videos, but identity-specific human video generation with customized ID images remains under-explored. The key challenge lies in maintaining consistently high ID fidelity while preserving the original motion dynamics and semantic following after identity injection. Current video identity customization methods mainly rely on reconstructing the given identity images with text-to-image models, whose distribution diverges from that of the T2V model. This process introduces a tuning-inference gap, leading to dynamic and semantic degradation. To tackle this problem, we propose a novel framework, dubbed PersonalVideo, that applies a mixture of reward supervision on synthesized videos instead of a simple reconstruction objective on images. Specifically, we first incorporate an identity consistency reward to effectively inject the reference's identity without the tuning-inference gap. We then propose a novel semantic consistency reward to align the semantic distribution of the generated videos with that of the original T2V model, which preserves its dynamic and semantic following capability during identity injection. Because the reward training is non-reconstructive, we further employ simulated prompt augmentation, which reduces overfitting by supervising generated results in more semantic scenarios and yields good robustness even with only a single reference image. Extensive experiments demonstrate our method's superiority in delivering high identity faithfulness while preserving the inherent video generation qualities of the original T2V model, outperforming prior methods.
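As a rough illustration of how a mixture of reward supervision might be combined into one training signal, the sketch below scores a generated video with two terms and sums them. It is a minimal sketch under stated assumptions, not the paper's implementation: the abstract does not give the reward formulas, so cosine similarity, the function names (`identity_reward`, `semantic_reward`, `mixture_loss`), and the `sem_weight` parameter are all hypothetical. Embeddings are plain lists of floats standing in for the outputs of a face encoder and a semantic encoder.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two vectors; returns 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identity_reward(frame_face_embs: Sequence[Vector], ref_face_emb: Vector) -> float:
    """ASSUMPTION: mean similarity between each frame's face embedding
    and the embedding of the single reference image."""
    sims = [cosine_similarity(f, ref_face_emb) for f in frame_face_embs]
    return sum(sims) / len(sims)


def semantic_reward(tuned_embs: Sequence[Vector], original_embs: Sequence[Vector]) -> float:
    """ASSUMPTION: mean frame-wise similarity between semantic embeddings of the
    customized model's video and the original T2V model's video for the same prompt."""
    sims = [cosine_similarity(t, o) for t, o in zip(tuned_embs, original_embs)]
    return sum(sims) / len(sims)


def mixture_loss(
    frame_face_embs: Sequence[Vector],
    ref_face_emb: Vector,
    tuned_embs: Sequence[Vector],
    original_embs: Sequence[Vector],
    sem_weight: float = 1.0,  # hypothetical balancing weight
) -> float:
    """Negative weighted sum of both rewards, so minimizing the loss raises them."""
    r_id = identity_reward(frame_face_embs, ref_face_emb)
    r_sem = semantic_reward(tuned_embs, original_embs)
    return -(r_id + sem_weight * r_sem)
```

In this sketch both rewards are computed on synthesized video frames rather than on a reconstruction of the reference image, which mirrors the non-reconstructive setup the abstract describes. Prompt augmentation would then amount to evaluating `mixture_loss` over videos generated from many different prompts.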

@article{li2024personalvideo,

title={PersonalVideo: High ID-Fidelity Video Customization without Dynamic and Semantic Degradation},

author={Li, Hengjia and Qiu, Haonan and Zhang, Shiwei and Wang, Xiang and Wei, Yujie and Li, Zekun and Zhang, Yingya and Wu, Boxi and Cai, Deng},

journal={arXiv preprint arXiv:2411.17048},

year={2024}

}